At Netlify, we’re big fans of Linear. Across the company there are so many projects in flight that it’s hard to get a sense of where things stand and what might need more attention. To solve this, we spent a little time creating an AI system that generates project summaries as updates come in and, every week, sends the team an “executive summary” view of all of the projects that have been updated. This guide walks you through how to build this system using Linear, Netlify, and Anthropic.

While this use case is specific to Linear, the pattern (receive data via a webhook, process it with AI, then generate useful views of the data) applies to countless other use cases.

# TL;DR

Use the power of AI to summarize all project updates and provide a high-level executive summary for your team to follow along.


# What we’re building

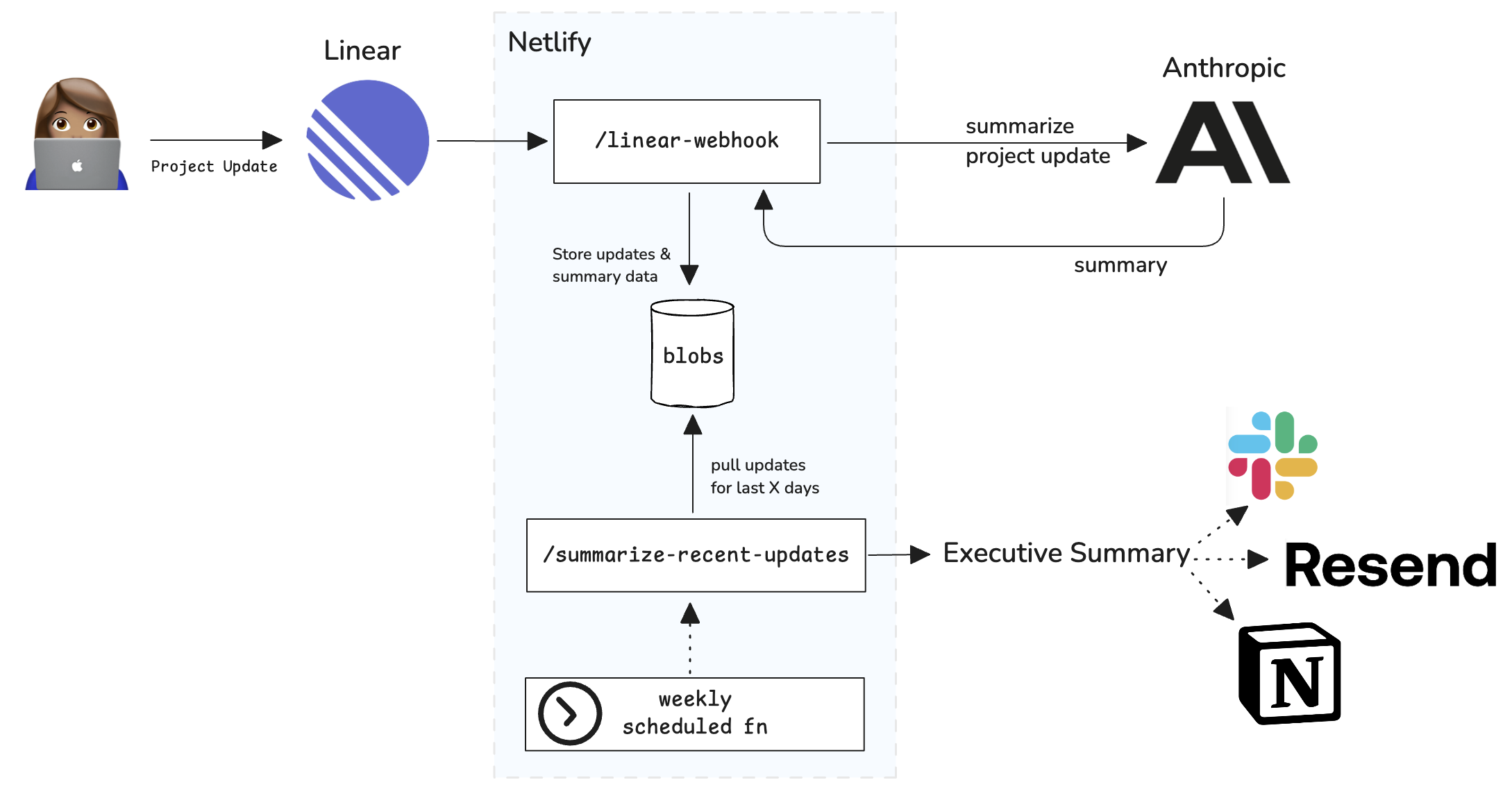

The system we’re building has a webhook that receives Linear project updates. It uses Anthropic’s Claude models to summarize each update and then stores the contents in Netlify Blobs. A second endpoint compiles an executive summary that can be sent to or stored in any system you need. This second endpoint can be called on demand or from a scheduled function to produce executive summaries on a recurring interval.

# Getting started

To get set up, let’s get the API keys and overhead out of the way.

- Deploy this example project, create a new Netlify site, or follow this guide with an existing site.

- Create a Linear webhook for Project Updates and store the webhook’s signing secret in the site’s environment variables under the key `WEBHOOK_SECRET`. The webhook URL is the site’s domain + `/linear-webhook`.

- Create an Anthropic account and generate a new API key. Store this API key in the site’s environment variables under the key `ANTHROPIC_API_KEY`.

- Add an `INTERNAL_API_KEY` environment variable to the site. Its value can be any secret string; it authenticates requests to the endpoints this guide sets up. Call those endpoints with an `x-api-key` header that matches this value.

- Install the necessary dependencies:

  ```bash
  npm i --save @anthropic-ai/sdk @netlify/blobs @netlify/functions
  ```
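Each function in this guide reads these variables with `Netlify.env.get(...) || ""`, so a missing variable fails quietly (for example, every webhook call simply gets rejected). If you’d rather fail fast, a small helper like the one below could check them up front. This is a hypothetical sketch, not part of the example project: `requireEnv` and `missingEnv` are illustrative names, and the getter is passed in so the helper can be tested outside Netlify.

```typescript
// Hypothetical helper (not part of the original project): read a required
// environment variable and fail loudly instead of falling back to "".
type EnvGetter = (name: string) => string | undefined;

export function requireEnv(get: EnvGetter, name: string): string {
  const value = get(name);
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// The three variables this guide relies on.
export const REQUIRED_ENV = ["WEBHOOK_SECRET", "ANTHROPIC_API_KEY", "INTERNAL_API_KEY"] as const;

// Returns the names of any required variables that are missing or empty.
export function missingEnv(get: EnvGetter): string[] {
  return REQUIRED_ENV.filter((name) => {
    const value = get(name);
    return value === undefined || value.trim() === "";
  });
}
```

Inside a function you might call `requireEnv((name) => Netlify.env.get(name), "ANTHROPIC_API_KEY")`.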

# Creating the Linear Webhook

Start by creating a new serverless function in the site’s functions directory.

In this function we will add the following logic:

```typescript
import type { Context } from "@netlify/functions";
import { createHmac } from "node:crypto";
import { summarizeUpdate } from "./utils/ai.mts";
import {
  addNewUpdateToLedger,
  deleteLinearUpdateSummary,
  storeLinearUpdateSummary,
} from "./utils/storage.mts";

export default async (request: Request, context: Context) => {
  // read the raw body: the signature is computed over the exact payload Linear sent
  const payload = await request.text();

  if (context.deploy.context === "production") {
    // for production, verify the Linear signature
    const signature = createHmac("sha256", Netlify.env.get("WEBHOOK_SECRET") || "")
      .update(payload)
      .digest("hex");
    if (signature !== request.headers.get("linear-signature")) {
      return new Response(null, { status: 400 });
    }
  } else {
    // non-production can use a simple API key check
    if (Netlify.env.get("INTERNAL_API_KEY") !== request.headers.get("x-api-key")) {
      return new Response(null, { status: 400 });
    }
  }

  // only parse the payload once the request is authenticated
  const { action, data, type, url } = JSON.parse(payload);

  if (type?.toLowerCase() === "projectupdate") {
    const { id, createdAt, body } = data;

    if (action === "create" || action === "update") {
      // use AI to summarize the update, falling back to the raw text
      const summary = (await summarizeUpdate(body)) || body;

      await storeLinearUpdateSummary({ id, projectUrl: url, update: data, summary });

      // append this project update to the ledger;
      // the ledger is used to find the updates within a time range
      if (action === "create") {
        await addNewUpdateToLedger({ id, createdAt });
      }
    } else if (action === "remove") {
      // don't include updates that have been removed in Linear
      await deleteLinearUpdateSummary({ id });
    }
  }

  // tell Linear all is good
  return new Response(null, { status: 200 });
};

export const config = {
  path: "/linear-webhook",
};
```

This function does a few key things:

- Authenticate the request. In production, it verifies the `linear-signature` header that Linear sends with each webhook call; in other deploy contexts, it checks the `x-api-key` header against `INTERNAL_API_KEY`.
- When webhook calls create or update project updates, it will:
  - Summarize the Linear project update data (we’ll cover this more below).
  - Store the update data and the generated summary in Netlify Blobs.
  - Add each newly created project update to a ledger file. This is just a JS object (stored as JSON) that tracks each project update and its creation date.
- When webhook calls remove project updates, it deletes them from Netlify Blobs.
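The production check above compares signatures with `!==`. A common hardening step is a constant-time comparison using Node’s `timingSafeEqual`. The sketch below assumes, as the webhook code does, that `linear-signature` carries the hex-encoded HMAC-SHA256 of the raw body; `isValidLinearSignature` is a hypothetical helper name. Unlike the original, it rejects every request when the secret is empty, rather than computing an HMAC with an empty key.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Sketch: verify a hex-encoded HMAC-SHA256 signature of the raw body with a
// constant-time comparison. Assumes the header format used by the webhook above.
export function isValidLinearSignature(
  rawBody: string,
  signatureHeader: string | null,
  secret: string,
): boolean {
  // missing header or unset secret: reject outright
  if (!signatureHeader || !secret) return false;

  const expected = createHmac("sha256", secret).update(rawBody).digest();
  const received = Buffer.from(signatureHeader, "hex");

  // timingSafeEqual throws when lengths differ, so check length first
  if (received.length !== expected.length) return false;
  return timingSafeEqual(expected, received);
}
```

It could replace the production branch’s comparison: `if (!isValidLinearSignature(payload, request.headers.get("linear-signature"), Netlify.env.get("WEBHOOK_SECRET") || "")) { return new Response(null, { status: 400 }); }`.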

# AI Summarization

In this guide, we use Anthropic’s SDK to summarize each update quickly. Anthropic’s SDK and its Claude family of models make it easy to get exactly the results we’re looking for.

```typescript
import Anthropic from "@anthropic-ai/sdk";

export async function summarizeUpdate(text: string) {
  const anthropic = new Anthropic({
    apiKey: Netlify.env.get("ANTHROPIC_API_KEY"),
  });

  const prompt = `The user will provide an update about an active project. Any unknown reference is a reference to the project itself. Create an executive summary of the information in less than 3 sentences.`;

  const msg = await anthropic.messages.create({
    model: "claude-3-5-sonnet-20240620",
    max_tokens: 2048,
    system: prompt,
    messages: [{ role: "user", content: text }],
  });

  // return the first text block; checking `type` narrows the block so `.text` type-checks
  for (const block of msg.content) {
    if (block.type === "text") return block.text;
  }
  return undefined;
}
```

The prompt tells the LLM, Claude 3.5 Sonnet, how we need each project update summarized. The code passes it as a system prompt that instructs the model to keep each summary under three sentences. While building this solution internally, we found that asking the model to keep the information short also removes the superfluous, “chatty” content that conversational models tend to produce.

This function took about 5 minutes to build and to verify that everything worked as expected. So, while you can use any AI system to produce these patterns, Anthropic’s Claude should be high on your list to use or try in your workloads.
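`summarizeUpdate` returns only the first text block, and the webhook falls back to the raw update body when the result is falsy. If you want that fallback to also catch whitespace-only output, or to keep every text block a response contains, a small pure helper works. This is a sketch: `ContentBlock` is a simplified local stand-in for the SDK’s content block types (only `text` and `tool_use` are modeled), and `extractSummaryText` is a hypothetical name.

```typescript
// Simplified stand-in for the SDK's content block union (assumption: only the
// fields used here are modeled). Blocks are discriminated by `type`.
type ContentBlock =
  | { type: "text"; text: string }
  | { type: "tool_use"; id: string; name: string; input: unknown };

type TextBlock = Extract<ContentBlock, { type: "text" }>;

// Join every non-empty text block; return undefined when there is no usable
// text so the caller's `|| body` fallback kicks in.
export function extractSummaryText(content: ContentBlock[]): string | undefined {
  const text = content
    .filter((block): block is TextBlock => block.type === "text")
    .map((block) => block.text.trim())
    .filter((t) => t.length > 0)
    .join("\n");
  return text.length > 0 ? text : undefined;
}
```

Wiring it into `summarizeUpdate` would mean passing `msg.content` and typing the parameter with the SDK’s own content block type instead of the stand-in.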

# Storing updates and the update ledger

Netlify Blobs makes it easy to store data for websites. We take advantage of that by installing the Netlify Blobs client and using it; no extra API keys or permission setup is needed. We use deploy-specific storage for non-production deploys and global storage in production, which lets us start from an empty store on development branches and while testing.

```typescript
import type { Context } from "@netlify/functions";
import { getDeployStore, getStore } from "@netlify/blobs";

const LINEAR_STORE_NAME = "linear-updates";

function getBlobsStore(storeName: string) {
  const context = Netlify.context as Context;
  const options = { name: storeName, consistency: "strong" } satisfies Parameters<typeof getStore>[0];
  // production shares one global store; every other deploy gets its own isolated store
  if (context.deploy.context === "production") {
    return getStore(options);
  } else {
    return getDeployStore(options);
  }
}

export async function storeLinearUpdateSummary({
  id,
  projectUrl,
  update,
  summary,
}: {
  id: string;
  projectUrl: string;
  update: any;
  summary: string;
}) {
  // store the update so we can pull it later
  return getBlobsStore(LINEAR_STORE_NAME).set(
    `updates/${id}.json`,
    JSON.stringify({ update, summary, projectUrl }, null, 2),
  );
}

export async function addNewUpdateToLedger({ id, createdAt }: { id: string; createdAt: string }) {
  const store = getBlobsStore(LINEAR_STORE_NAME);
  const currentLedger = (await store.get(`update-ledger.json`)) || "{}";
  const ledger = JSON.parse(currentLedger) as Record<string, number>;
  // key: update ID, value: creation time in epoch milliseconds
  ledger[id] = new Date(createdAt).getTime();
  await store.set(`update-ledger.json`, JSON.stringify(ledger, null, 2));
}

export async function deleteLinearUpdateSummary({ id }: { id: string }) {
  return getBlobsStore(LINEAR_STORE_NAME).delete(`updates/${id}.json`);
}

export async function getLinearUpdateSummaries({ days }: { days: number }) {
  const currentDay = new Date().getTime();
  const lowerBoundaryTime = currentDay - days * 24 * 60 * 60 * 1000;

  const store = getBlobsStore(LINEAR_STORE_NAME);
  const ledger = JSON.parse((await store.get(`update-ledger.json`)) || "{}") as Record<string, number>;

  const summaryIds = Object.keys(ledger).filter((id) => ledger[id] > lowerBoundaryTime);

  const summaries = await Promise.all(
    summaryIds.map(async (id) => {
      const summary = await store.get(`updates/${id}.json`);
      return summary && JSON.parse(summary);
    }),
  );

  // removed updates stay in the ledger but their blob is gone, so drop empty results
  return summaries.filter(Boolean);
}
```

This code puts all of our Linear updates into a Blob store called `linear-updates`. Within that store, each update is saved under the key `updates/{id}.json` and the ledger under `update-ledger.json`. Keeping this data in its own store isolates it from any other data the site stores, so we can manage it knowing it all lives in one place.

Because the system generates executive summaries for a time range, we need a way to keep track of all of the updates in the system and when they happened. There are a few ways to go about this. For this guide, we store a JSON object that acts as a simple ledger: each key is a project update ID, and each value is the time the project update was created in Linear. This keeps the filtering logic in the summary endpoint simple.
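The ledger filtering in `getLinearUpdateSummaries` can also be written as pure functions, which makes the time-window logic easy to test. The sketch below mirrors that filter (same strict `>` boundary) and adds a hypothetical `pruneLedger` step that is not in the original guide: nothing ever removes old entries, so the ledger blob grows with every new update. `now` is a parameter so results are deterministic.

```typescript
// Ledger shape used above: update ID -> creation time in epoch milliseconds.
type Ledger = Record<string, number>;

const DAY_MS = 24 * 60 * 60 * 1000;

// IDs created within the trailing `days` window, newest first.
export function idsInWindow(ledger: Ledger, days: number, now: number = Date.now()): string[] {
  const lowerBoundary = now - days * DAY_MS;
  return Object.entries(ledger)
    .filter(([, createdAt]) => createdAt > lowerBoundary)
    .sort(([, a], [, b]) => b - a)
    .map(([id]) => id);
}

// Hypothetical maintenance step: keep only entries newer than `retainDays`,
// so the ledger blob stays bounded. The result would be written back with store.set.
export function pruneLedger(ledger: Ledger, retainDays: number, now: number = Date.now()): Ledger {
  const lowerBoundary = now - retainDays * DAY_MS;
  return Object.fromEntries(
    Object.entries(ledger).filter(([, createdAt]) => createdAt > lowerBoundary),
  );
}
```

Pruning could run in the scheduled function, with a retention period comfortably longer than the largest `days` value you expect to request.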

# Generating Executive Summaries

Once we’ve established the webhook, Linear will call that endpoint as the team provides updates to Linear projects over time. Now we will start to do something useful with that information. Let’s add another serverless function to generate the executive summaries.

import type { Context } from "@netlify/functions";import { getLinearUpdateSummaries } from "./utils/storage.mts";

const healthMap = { "offTrack": { icon: "🔴", dialog: "off track", sort: 0, }, "atRisk": { icon: "🟡", dialog: "at risk", sort: 1, }, "onTrack": { icon: "🟢", dialog: "on track", sort: 2, },};

export default async (request: Request, context: Context) => { // non production uses a simple API key check if (Netlify.env.get("INTERNAL_API_KEY") !== request.headers.get("x-api-key")) { return new Response(null, { status: 400 }); }

const parsedURL = new URL(request.url); const days = parseInt(parsedURL.searchParams.get("days") || "7");

const summaries = await getLinearUpdateSummaries({ days });

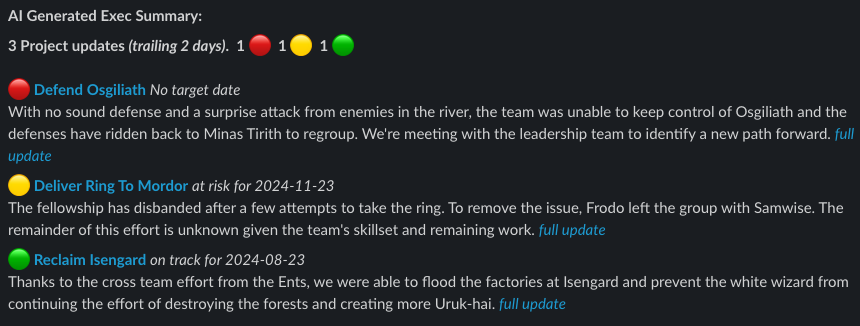

// count all by current health const numRed = summaries.filter((s) => s.update.health === "offTrack").length; const numYellow = summaries.filter((s) => s.update.health === "atRisk").length; const numGreen = summaries.filter((s) => s.update.health === "onTrack").length;

// Build up the update content for the summary const execSummary = `*AI Generated Exec Summary*:${summaries.length} Project updates _(trailing ${days} days)_. ${numRed ? ` ${numRed} 🔴 ` : ""}${ numYellow ? ` ${numYellow} 🟡 ` : "" }${numGreen ? ` ${numGreen} 🟢` : ""}

${summaries .sort((a, b) => { // order the updates by health to surface the // most at risk plans first return healthMap[a.update.health].sort - healthMap[b.update.health].sort; }) .map((s) => { const { update, projectUrl, summary } = s; const { health, project, slugId, infoSnapshot } = update;

const towardTarget = infoSnapshot.targetDate ? `${healthMap[health].dialog} for ${infoSnapshot.targetDate}` : "Missing target date";

return `${healthMap[health].icon} *[${project.name}](${projectUrl})* _${towardTarget}_${summary} _[full update](${projectUrl}#projectUpdate-${slugId})_`; }) .join("")} `;

return new Response(execSummary, { status: 200 });};

export const config = { path: "/summarize-recent-updates",};This serverless function will be at {site_domain}/summarize-recent-updates and will accept a query parameter of days which is the integer number of days in the past to get summaries for. It can be called directly and will return the templated string. This gives us an API endpoint to get this information for many different use cases.

With the function, there are 2 key pieces of work 1) Fetching all of the summaries for the trailing N days and 2) combining all of the data into an executive summary. Since the webhook function generated the succinct summaries of each project update when the webhook is called, we don’t have to invoke the LLM again unless we want to do more generative work with it. This will reduce a lot of the work/time this function will have to do.

Under the hood of the getLinearUpdateSummaries , it’s loading the full ledger which has the update ID’s and the update creation time. It will find all of the updates that have a creation time between now and the number of days passed in the query parameter.

Finally, it will generate the executive summary using a template literal string. For this template, it’s going to identify the number of top level information like the number of updates and group their statuses. Finally, it will loop through the projects and add the summaries and relevant information.

When there are relevant project updates, the response will the markdown string that, when rendered, will look like the following:
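The endpoint counts health statuses with three separate `filter` passes. A single-pass alternative is sketched below; `countByHealth` and `formatHealthLine` are hypothetical names, and the health values are the three that `healthMap` handles. Unknown values are ignored rather than counted. Relatedly, the endpoint’s `healthMap[...]` lookups would throw if an update ever arrived with a health value outside these three, so a guard like `isHealth` could be worth applying there too.

```typescript
// The health values handled by `healthMap` in the endpoint above.
const HEALTHS = ["offTrack", "atRisk", "onTrack"] as const;
type Health = (typeof HEALTHS)[number];

const healthIcons: Record<Health, string> = { offTrack: "🔴", atRisk: "🟡", onTrack: "🟢" };

// Own-value check (avoids `in`, which would also match inherited keys like "toString").
function isHealth(value: string): value is Health {
  return (HEALTHS as readonly string[]).includes(value);
}

// Count updates per health value in a single pass; unknown values are ignored.
export function countByHealth(healths: string[]): Record<Health, number> {
  const counts: Record<Health, number> = { offTrack: 0, atRisk: 0, onTrack: 0 };
  for (const health of healths) {
    if (isHealth(health)) counts[health] += 1;
  }
  return counts;
}

// Build the header fragment in red, yellow, green order, skipping empty buckets.
export function formatHealthLine(counts: Record<Health, number>): string {
  return HEALTHS.filter((health) => counts[health] > 0)
    .map((health) => `${counts[health]} ${healthIcons[health]}`)
    .join(" ");
}
```

In the endpoint, this would be called as `formatHealthLine(countByHealth(summaries.map((s) => s.update.health)))`.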

# Scheduling

We now have the webhook that Linear sends data to and the API endpoint that can generate the executive summary whenever it’s needed. The final part of this automation is to set up a scheduled function in Netlify and send this information to a destination.

Just like the other functions, we add the scheduled function to the functions directory, but set a `schedule` property instead of a `path` property in the config. Schedules use cron syntax; the one in this example runs every Monday at midnight (UTC). That’s it!

```typescript
import { type Context } from "@netlify/functions";

export default async (request: Request, context: Context) => {
  // build the URL of the summary endpoint on this site
  const execSummaryUrl = new URL(context.site.url || Netlify.env.get("URL") || "");
  execSummaryUrl.pathname = "/summarize-recent-updates";
  execSummaryUrl.searchParams.set("days", "7");

  const execSummary = await fetch(execSummaryUrl, {
    headers: {
      "x-api-key": Netlify.env.get("INTERNAL_API_KEY") || "",
    },
  });

  if (!execSummary.ok) {
    console.error("failed to fetch exec summary", execSummary.status);
    return;
  }

  const execSummaryText = await execSummary.text();

  // do something with the summary!
  console.log({ execSummaryText });

  // Send it to Slack, email it, store it in Blobs, etc. Now that you have
  // the summary, you can send it to your team's communication tool of choice.
};

export const config = {
  schedule: "0 0 * * 1", // run every Monday at midnight (UTC)
};
```

The logic pulls the executive summary from the `summarize-recent-updates` endpoint we created earlier.

# Taking this forward

This guide has broken down all of the steps needed to automate creating executive summaries of project updates from Linear using AI via Anthropic’s Claude models. The next step is delivering these summaries to your team! With the markdown, you can send this summary to Slack, use Resend to create emails, create a new page in Notion, or send it to any other tool your team prefers. Sending data to another system can happen in the summarize-recent-updates endpoint or within the recurring scheduled function. What’s important is to deliver this information in the way your team prefers to communicate and collaborate.
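If Slack is the destination, one detail matters: Slack’s `mrkdwn` text format writes links as `<url|text>` rather than `[text](url)`, so the links in the summary need converting. The sketch below assumes a Slack incoming webhook, which accepts a JSON body with a `text` field, and a hypothetical `SLACK_WEBHOOK_URL` environment variable; `toSlackMrkdwn` and `buildSlackRequest` are illustrative names. It builds the request without sending it, so it can be tested offline.

```typescript
// Convert markdown links `[text](url)` into Slack mrkdwn links `<url|text>`.
// Bold (*...*) and italic (_..._) in the summary already match Slack's syntax.
export function toSlackMrkdwn(markdown: string): string {
  return markdown.replace(/\[([^\]]+)\]\(([^)\s]+)\)/g, "<$2|$1>");
}

// Build (but do not send) the POST request for a Slack incoming webhook.
// `webhookUrl` would come from a hypothetical SLACK_WEBHOOK_URL env var.
export function buildSlackRequest(webhookUrl: string, summary: string): Request {
  return new Request(webhookUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ text: toSlackMrkdwn(summary) }),
  });
}
```

In the scheduled function, after reading `execSummaryText`, you might send it with `await fetch(buildSlackRequest(Netlify.env.get("SLACK_WEBHOOK_URL") || "", execSummaryText));`.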

This pattern of summarizing data over time and providing an aggregate view to your team is a powerful one for many use cases.

Want to get started? Try it yourself!